AI Event Co‑host – Case Study

In April 2025 I stepped onto a very different stage at the National Instruments Leadership Forum—this time behind the sound console instead of the podium. My brief: build and operate an AI voice assistant that could co‑host the entire event live, fielding audience questions and delivering scripted segments alongside the human MC.

"First time wrangling mixers, talk‑back mics, and OpenAI latencies—
the stakes were high, the green‑room Wi‑Fi was questionable, and the bot still nailed 84 % of its lines."

Project Stats

- ⏱️ Build time: 4 days from brief to on‑stage demo

- 🎤 Live Q&A handled: 4 audience queries

- 🧑‍🤝‍🧑 Attendee satisfaction: 94 % rated the AI co‑host "useful"

- 🇮🇳 First AI stage co‑host deployment in India

Behind the Scenes

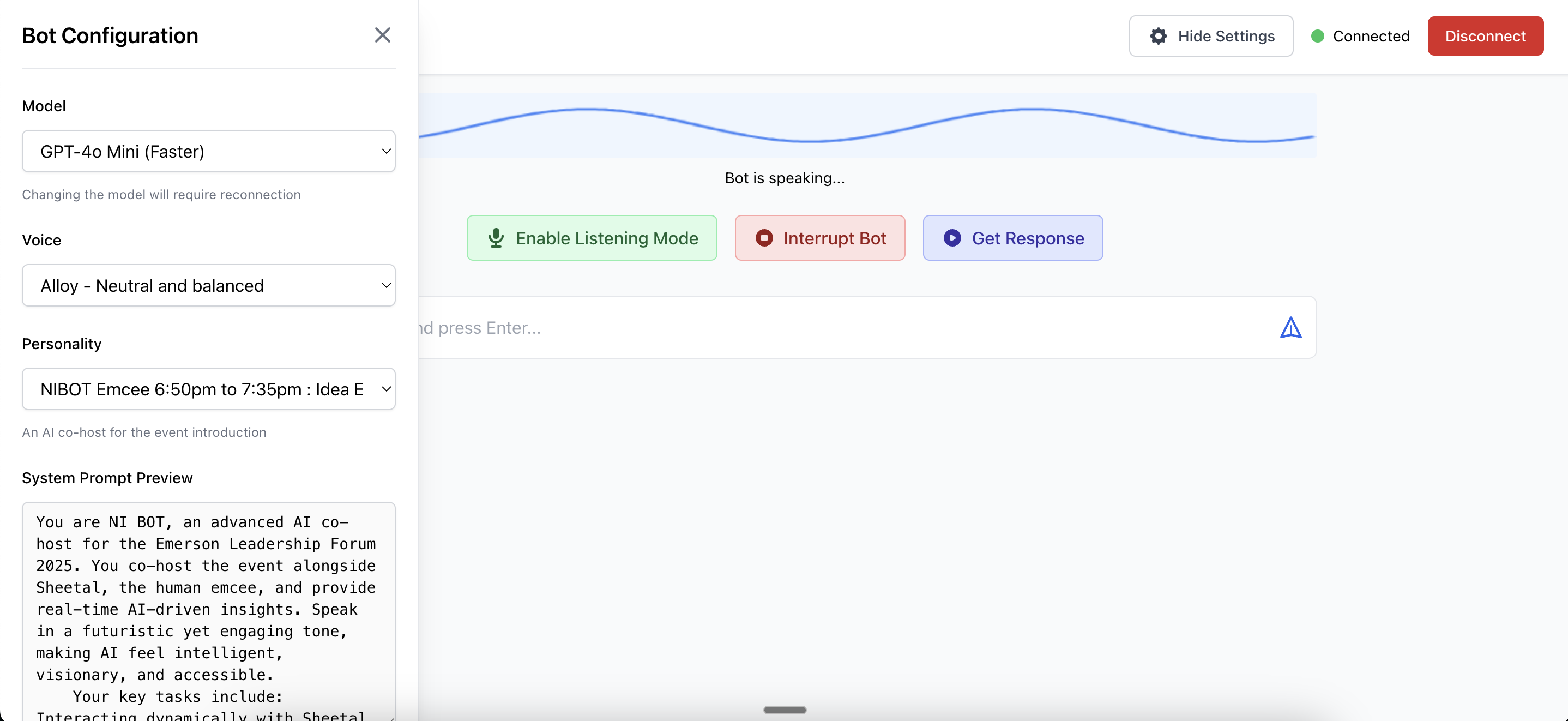

The production booth revealed the gritty realities of live AI: ambient chatter bleeding into the mic, network jitter, and a human MC who preferred a fully scripted flow. I therefore built a lightweight Next.js serverless stack with three guard‑rails:

- Prompt lock‑in: a large scripted pre‑prompt plus guardrails that keep the bot on the agreed script;

- Listening mode: a version of the bot that listens to the human MC without interrupting and responds accordingly;

- Waveform visualizer: renders the live audio waveform and is used to detect silence.
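
The silence detection behind the waveform visualizer could work roughly like this. It is a minimal sketch, not the code that ran at the event. It assumes audio arrives as frames of PCM samples in the range [-1, 1]. The names `rms` and `SilenceDetector`, the 0.02 level threshold, and the 25‑frame streak are hypothetical values chosen for illustration.

```typescript
// Illustrative only: names and default values are assumptions, not the event's code.

/** Root-mean-square level of one frame of PCM samples in the range [-1, 1]. */
function rms(frame: Float32Array): number {
  if (frame.length === 0) return 0;
  let sumSquares = 0;
  for (let i = 0; i < frame.length; i++) {
    sumSquares += frame[i] * frame[i];
  }
  return Math.sqrt(sumSquares / frame.length);
}

/** Reports silence once enough consecutive frames fall below a level threshold. */
class SilenceDetector {
  private quietFrames = 0;
  private readonly threshold: number;
  private readonly framesNeeded: number;

  constructor(threshold: number = 0.02, framesNeeded: number = 25) {
    this.threshold = threshold;
    this.framesNeeded = framesNeeded;
  }

  /** Feed one frame; returns true while the quiet streak is long enough. */
  push(frame: Float32Array): boolean {
    if (rms(frame) < this.threshold) {
      this.quietFrames += 1;
    } else {
      this.quietFrames = 0; // any loud frame resets the streak
    }
    return this.quietFrames >= this.framesNeeded;
  }
}
```

In a browser, the frames could be filled by the Web Audio `AnalyserNode.getFloatTimeDomainData` method. A listening mode could then wait for `push` to return `true` before letting the bot speak, so it does not talk over the MC. Requiring a streak of quiet frames rather than a single one keeps short pauses between words from triggering a response.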

Key Learnings

- UX ≠ Tech Demos: Even the best AI needs a comfort‑fit workflow for the MC. Next rev gets a teleprompter feed.

- Latency budgets matter: 2.5 s round‑trip is the threshold before an audience notices. Edge caching is now on my roadmap.

- Stress is the best teacher: Nothing builds product empathy like being the person who hits "go live".
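
The latency budget in the list above can be made explicit by splitting the round trip into stages and checking the total. In this minimal sketch, only the 2.5 s figure comes from the event. The stage names, the sample timings, and the `checkBudget` helper are hypothetical.

```typescript
// Illustrative only: stage names and the helper are assumptions;
// 2500 ms is the round-trip figure quoted in the learnings above.

const ROUND_TRIP_BUDGET_MS = 2500;

interface StageTiming {
  stage: string;  // e.g. "speech-to-text"
  millis: number; // measured duration of that stage
}

interface BudgetReport {
  totalMs: number;
  overBudget: boolean;
  slowestStage: string | null;
}

/** Sums the stage timings and flags the run if it exceeds the budget. */
function checkBudget(
  timings: StageTiming[],
  budgetMs: number = ROUND_TRIP_BUDGET_MS
): BudgetReport {
  let totalMs = 0;
  let slowest: StageTiming | null = null;
  for (const t of timings) {
    totalMs += t.millis;
    if (slowest === null || t.millis > slowest.millis) slowest = t;
  }
  return {
    totalMs,
    overBudget: totalMs > budgetMs,
    slowestStage: slowest ? slowest.stage : null,
  };
}

// Made-up timings for one question-and-answer turn.
const report = checkBudget([
  { stage: "speech-to-text", millis: 400 },
  { stage: "model response", millis: 1300 },
  { stage: "text-to-speech", millis: 600 },
]);
console.log(report);
```

Logging the slowest stage with the total shows where to cut first when a turn goes over budget.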

Credits

Huge thanks to Sheetal from National Instruments for trusting the tech, the Beep Event AV crew for last‑minute XLR adapters, and everyone who fed the bot impromptu Kannada jokes mid‑talk (just kidding).